The proposed ban on offensive autonomous weapons is unrealistic and dangerous

August 5, 2015

AI-controlled armed, autonomous UAVs may take over when things start to happen faster than human thought in future wars. From Call of Duty Black Ops 2 (credit: Activision Publishing)

The open letter from the Future of Life Institute (FLI) calling for a “ban on offensive autonomous weapons” is as unrealistic as the broad relinquishment of nuclear weapons would have been at the height of the Cold War.

A treaty or international agreement banning the development of artificially intelligent robotic drones for military use would not be effective. It would be impossible to completely stop nations from secretly working on these technologies out of fear that other nations and non-state entities are doing the same.

It’s also not rational to assume that terrorists or a mentally ill lone wolf attacker would respect such an agreement.

Dangers of crippling the military

Future systems designed for civilian purposes might be convertible to weapons to be used in ways that are not predictable or foreseeable today.

For example, a civilian drone could be electronically modified by another nation or terrorist organization to be capable of making better tactical decisions than human-controlled systems.

If continued improvements are made to civilian AI and robotic drone systems, but the military is banned from doing the same, it could lead to a situation where a modified civilian drone would outperform far superior military equipment controlled by humans in battle.

Even if superior weapon systems on an F-22 or a human-piloted drone could outperform a weaponized civilian drone, it is conceivable that a less maneuverable or less mechanically capable system with AI enhancements could still win a specific battle. A drone hacked to have AI could do this by tactically outsmarting the human-controlled, superior fighting system.

Agile tactical systems. Machines excel at making split-second tactical decisions that humans have trouble with. This could lead to a significant disaster if we choose not to develop any systems capable of combating these types of threats.

This is akin to gaming programs, which have already surpassed human ability. Even if the computer starts off a game of chess with fewer pieces than even the best human opponents, the odds are still in its favor.

And tactical decision-making does not require the same level of intelligence that strategic thinking does. So it is conceivable that a smart device would not even require anything close to human level artificial intelligence to perform better than humans in a variety of tactical scenarios — not just the limited split-second combat decisions we use them for today.

Remote-controlled robotic systems. Preventing electronic hacking of U.S. military systems or of targets that pose a significant national security threat is gaining broad acceptance by the political establishment. But an even more dangerous example of this hacking scenario would be a remote-controlled robotic system that is capable of taking over the controls of a traditional manned jet aircraft.

But even if we continue to maintain defenses against electronic hacking of military aircraft, nuclear reactors, or nuclear submarines, we also have to create defenses against robotic systems that could attempt to infiltrate and take direct physical control of these systems.

Hogwit | Flying Gun: Small, hard-to-detect personal drones like this modified handgun drone would be extremely difficult to fight with any weapons system, whether unmanned or controlled by a human operator.

How would you fight against 50,000 small agile robots invading a village if you only have four or five drones controlled by humans trying to find and shoot them down?

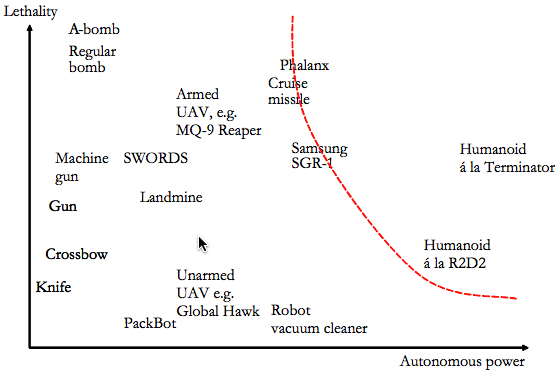

Lethality and autonomous power for a selection of weapons and robots. Autonomous power denotes the amount and level of actions, interactions and decisions the considered artifact is capable of performing on its own. Robots to the right of the dotted line have the ability to kill as a result of complex internal decision processes. (credit: Thomas Hellstrom)

In “On the Moral Responsibility of Military Robots,” Thomas Hellstrom of the Department of Computing Science at Umeå University in Sweden defines an “autonomous agent” as “a system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to effect what it senses in the future.” He defines “autonomous power” as “the amount and level of actions, interactions and decisions an agent is capable of performing on its own.”

Stopping military R&D in autonomous weapons would keep us from being prepared if non-militarized drone systems or something comparable are converted for military use. That’s because it’s much easier and faster to militarize an existing technology than to develop a new one.

Proactive planning. We had planes and tanks several decades before the doctrine to use them effectively was developed. The imagination of military leadership usually lags behind the capabilities of technology, and the bureaucracy of government has a tendency to self-censor ideas from people like Billy Mitchell or George Patton until the next war breaks out.

U.S. Army Captain (then 1LT) Sam Wallace with a Land Based Phalanx Weapon System (LPWS) used for detecting and/or destroying incoming rockets, artillery, and mortar rounds in the air before they hit their ground targets (credit: S. Wallace)

I served as an officer in the U.S. Army from 2006 until 2012. From 2009 to 2010, I completed a six-month training class called Captain’s Career Course, which Captains in the Army are required to complete prior to assuming command of a company-sized unit.

During this course, we had a lot of Field Grade and General officers come and lecture our class. One time we had a presentation on the future of Air Defense systems. I remember asking during the Q&A how we would defend against multiple small low-flying robots that would each carry part of a bomb or fissionable material to the target, and then could combine it on the other side to explode.

I did not get an answer, and mostly people just stared at me like I was a weirdo for not only asking that, but for thinking that question up in the first place.

The U.S. should not just hope that everyone plays nice and does not make these kinds of weapons. Even if we only develop capabilities through research programs such as DARPA’s, we should do so.

On my first deployment, I was an officer in a Counter Rocket Artillery and Mortar Unit located on Camp Victory near Baghdad, Iraq. During this deployment we maintained and operated several Land Based Phalanx Weapon Systems (LPWS) that have been designed to shoot down enemy insurgent rockets and mortars. This defensive system can be found on many naval vessels and was specifically converted for use on larger military bases in Iraq and Afghanistan.

This system can be automated, but much like the guns on the DMZ in Korea, a human is still required to authorize the weapon system to fire.

This is important to note because you might have only seconds to complete a battle drill and shoot down an insurgent rocket about to hit a forward operating base. With training, people can still complete a battle drill effectively against non-AI threats of this nature, even if they only have 10 to 30 seconds to do so.

The human operators do not aim or exercise any sort of direct control over the firing of the C-RAM system. The role of the human operators is to act as a final fail-safe in the process by verifying that the target is in fact a rocket or mortar, and that there are no friendly aircraft in the engagement zone. A human operator just presses the button that authorizes the weapon to track, target, and destroy the incoming projectile.

Even though the machine still does the majority of the work, this kind of limited human involvement in the process starts to reach the limits of what humans can react to in a short time span.

The next generation of threats will make it impossible not to give more autonomy to systems in order to defeat likely enemy threats as AI systems develop.

Rules of engagement

But it’s more than developing countermeasures. We need to give serious thought to the rules of engagement: how the military should respond to different situations and different AI systems, so that there is at least a baseline idea of how to react even if the threat ends up being different.

Consider this scenario: 50 civilian drones have been converted to be autonomous, with remote control and image recognition of specific locations or people for ethnically cleansing a village (not a technically demanding system).

Unfortunately, you don’t have any systems to realistically fight these drones, or even any rules of engagement for situations like that. And if you don’t know how many autonomous drones the enemy has, what do you do? Blow up the village to prevent the drones from spreading to other villages?

Broad relinquishment will almost assure that this scenario or one like it will occur, and we won’t have many options to stop them.

The feared weapons arms race is probably inevitable as a result of the competitive pressures of combat. So let’s not pretend that we can fight future flying robot tanks by using an equine cavalry defense and kumbaya mentality.

Rafael Marketing Ltd | Iron Dome

Scenarios

Here are some other scenarios to consider.

1. High-speed attack

Instead of seconds, things are happening in fractions of a second and you are fighting autonomous intelligent flying machines instead of a simple rocket or mortar. Interactions at human speed would be worthless, even if threat verification and authorization to fire were the human’s only responsibility: only a fully autonomous system would be capable of defeating the threat.

2. Communications loss — where the lines between offensive and defensive blur

The FLI open letter specifically refers to offensive weapon systems, but there are gray areas for systems that combine defensive and offensive capabilities and for autonomous vs. human control.

Assume an automated drone with AI speech recognition capabilities has just arrived on a scene, where it has detected the voice of a known ISIS executioner while simultaneously identifying the captive using facial recognition as being a known American hostage.

Now assume the drone is in a cave, could not get a signal back to an operator for verification, and did not have time to leave the cave to send video feedback and wait for a decision to be sent back. If the robot killed the executioner just before the prisoner was executed, would it be an offensive or a defensive weapon?

In this case, you are seeking out and killing an enemy in an offensive manner, but you are defending an innocent captive.

3. Deception

What if an enemy AI robotic drone is designed with the capability of faking or blocking the video feed that is sent back to a human controller from one of our friendly drones? It would then be necessary to design our friendly drones to be more intelligent so that they would be able to ascertain whether or not their video stream is being modified by an enemy AI robotic drone, and then implement countermeasures to prevent this.

You could eventually have a scenario where only intelligent AI robotic drones would be able to correctly ensure that they are receiving true visual identification of the enemy.

So even if a human is seeing a video feed of a target that he/she thinks is the enemy, they would still have to rely on the decision making capabilities of the friendly AI drone to ensure that the video feed has not been tampered with before engaging the target. When would it be OK to trust the machine in that scenario?

4. Cyborgs

In a more hypothetical but plausible future scenario, what if people begin to merge with machines to enhance their own capabilities as technology advances?

When would it be OK to kill them with a smart robot, and when would it require human verification? Would only cyborgs be able to get authorization to kill other cyborgs?

Or could any person who is a cyborg be viewed as a potential enemy AI drone? As has been demonstrated, it’s possible to hack an automobile, so it’s conceivable that one day it will be possible to hack someone with nanomachines in their brain, even without them knowing it, making them a walking weapon. What precautions should we take?

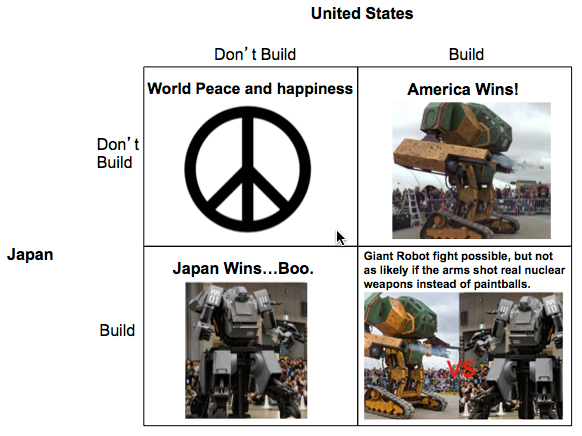

5. Prisoner’s dilemma

The following is a slightly humorous example of a prisoner’s dilemma, a problem that also applies to autonomous systems.

Prisoner’s dilemma: to build or not build a huge killer mechwarrior robot — Megabot vs. Kuratas (photos credit: Kelsey Atherton)

Imagine if the armament shot nuclear weapons instead of paintballs, and the only effective system to defeat a giant nuke-shooting killer robot was another giant nuke-shooting killer robot. It would make sense for all countries to denounce building such a thing in the first place, but at the end of the day you would not want to be without one if your adversaries might secretly create one.

Thus, the most rational decision for both nations in the scenario is to create a giant killer robot, even though it drains resources and is thus suboptimal.
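The reasoning in this scenario can be checked with a small payoff table. The following is a minimal sketch in Python, not a model of any real arms program: the payoff numbers and the names `PAYOFFS`, `best_response`, and `nash_equilibria` are assumptions made up for illustration. The numbers only encode the ordering described above: being the one nation without a robot is the worst outcome, and both nations building is worse for each than both refraining, because building drains resources.

```python
from itertools import product

ACTIONS = ("refrain", "build")

# Hypothetical payoffs as (nation 0, nation 1); higher is better.
# Only the ordering matters; the values themselves are illustrative.
PAYOFFS = {
    ("refrain", "refrain"): (3, 3),  # nobody spends resources
    ("refrain", "build"): (0, 4),    # nation 0 is defenseless
    ("build", "refrain"): (4, 0),    # nation 1 is defenseless
    ("build", "build"): (1, 1),      # both pay, neither gains an edge
}


def best_response(player, other_action):
    """Return the action that maximizes `player`'s payoff, given the other nation's action."""
    def payoff(action):
        profile = (action, other_action) if player == 0 else (other_action, action)
        return PAYOFFS[profile][player]
    return max(ACTIONS, key=payoff)


def nash_equilibria():
    """Return every action pair in which each nation is already playing its best response."""
    return [
        (a, b)
        for a, b in product(ACTIONS, ACTIONS)
        if best_response(0, b) == a and best_response(1, a) == b
    ]


if __name__ == "__main__":
    for other in ACTIONS:
        print(f"If the adversary chooses {other!r}, the best response is {best_response(0, other)!r}")
    print("Equilibria:", nash_equilibria())
```

With these assumed payoffs, building is the best response to either choice by the adversary, so both nations end up building even though each would be better off if both refrained. A different payoff ordering could produce a different result, which is why the assumption about relative payoffs matters more than the specific numbers.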

The treaty fallacy

The FLI open letter compared an AI ban to the international bans on chemical and biological weapons. Under those bans, if a country like Syria breaks the treaty and uses chemical weapons, you can still retaliate with weapon systems that are neither chemical nor biological.

“When everything is information, the ability to control your own information and disrupt your enemy’s communication, command, and control will be a primary determinant of military success,” writes Ray Kurzweil in The Singularity Is Near. Kurzweil is also an advisor to the U.S. Army Science Board.

It’s easy from a defense standpoint to agree to a treaty affirming that you won’t use bioweapons or chemical weapons against anyone when you have enough nuclear weapons to blow up the world several times over. You’re really just negotiating on what types of destructive capabilities you get to use and which ones are unethical to use in order to create mass destruction.

But an international ban on developing autonomous AI weapons is a completely different situation for two reasons.

- Ease of proliferation and integration of new theory into practical applications. It is too difficult to prevent artificial intelligence technology that is developed for purely civilian purposes from being weaponized. Once a new technique for creating machine intelligence is discovered and shared with the general public, it is reasonable to assume that it may also be applied to the weapons development programs of our adversaries — even if friendly militaries adhere to an autonomous weapon system ban. Unlike nuclear, biological, or chemical proliferation threats, AI proliferation will not require hard-to-acquire materials or industrial capabilities.

- Does not adhere to the same basic rules of game theory as banning chemical and traditional biological weapons. Once an entity or organization has a significant advantage in militarized artificial intelligence (or possibly even a modest one) over their potential adversaries, they could use their superior abilities to infiltrate and take over the command and control of their enemy.

An international treaty banning chemical or biological weapons does not give an enemy who still develops these systems an absolute advantage over treaty-adhering states like the U.S., who still have other retaliatory capabilities, including both conventional and nuclear weapons. But militarized AI is a game changer in that you could lose your ability to retaliate against a threat if your command and control is taken over by an enemy AI system.

Thus, basic game theory would predict that banning the development of military capabilities with autonomous AI systems is a different situation. This is because enemy autonomous AI robotic drone systems could potentially even disrupt your ability to retaliate if you lack comparable abilities. This is a totally different scenario than preventing an enemy from using one weapon of mass destruction while you have others to retaliate with if they cheat.

As a result of the potential to disrupt an opponent’s command and control, and because the capability is globally distributed, you would have to get all nation states, non-government entities, and even individuals to agree, which is clearly not realistic.

Some time in the next few decades you might also have to get a consciously aware AI weapon to agree to the terms of the treaty as well!

Future strategic options

I don’t think the recent push against AI by leaders like Elon Musk or Stephen Hawking will change competitive pressures to develop autonomous weapon systems.

Technologies like nanotechnology, AI, and genetic engineering will at some point pass a threshold where they become just as dangerous as nuclear, biological, and chemical weapons are today, but much easier to weaponize.

So we need to ensure that we are developing weapon systems, not just for the threats of today, but also for what we might face in the future. There may only be two options to defend against the potential misuse of these advanced technologies and prevent an existential destruction event. I personally think that option 2 is a much better way forward.

Option 1: World totalitarian state

The first option is to monitor anything and everything to ensure that these technologies are not used as weapons.

This would be even more intensive than the Patriot Act. That’s because the barriers to entry are low. Weaponizing AI will take significantly less infrastructure and resources to develop than the nuclear, chemical, and biological weapons of today.

Option 2: Develop military capabilities to fight unforeseen threats

The second option is to develop and maintain defensive and offensive capabilities to deal with potential threats. You still have to monitor potential threats, but you do not have to invade the civil liberties of every person at all times. Whether it is robotic drones, a weaponized virus, or an even more hypothetical future threat like self-replicating nanomachines, a treaty is not going to defend against an attack.

Conclusion: arms race inevitable

The technology is already here, and advances in AI in general will create an environment where the continuous development of defensive capabilities will be mandatory. We can’t uninvent deep learning, image recognition algorithms, and supercomputers — despite the FLI’s sincere but misguided attempt to stop advancements in autonomous weapon system development.

So, short of some sort of unlikely magical global acceptance that we will all instantly self-enhance and transcend barbarism, an arms race is inevitable.

We will also need to make a strategic decision to ensure that we are developing defenses against autonomous weapon systems, not just for the threats of today but to stay ahead of what we might face in the future.

Sam Wallace served as a U.S. Army officer from 2006 until 2012. He was the Battery Executive Officer and a Battle Captain for a Counter-Rocket, Artillery and Mortar Intercept mission based on Camp Victory near Baghdad, Iraq in 2008–2009. His unit utilized eight land-based Phalanx Weapon Systems during the deployment that were designed to shoot down enemy rockets and mortars. Later in 2010–2011, he commanded a mission to support the NATO security forces training mission in Afghanistan during the U.S. surge operation. His unit oversaw the training of more than 8,000 Afghan Security Force personnel at nine different basic training locations in eastern Afghanistan. He holds an MBA from Middle Tennessee State University and a B.S. in Mechanical Engineering from Virginia Military Institute.