This deep-learning method could predict your daily activities from your lifelogging camera images

October 12, 2015

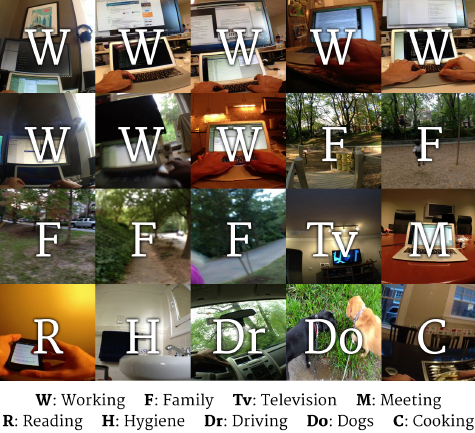

Example images from a dataset of 40,000 egocentric images with their respective labels. The classes shown are representative of the number of images per class in the dataset. (credit: Daniel Castro et al./Georgia Institute of Technology)

Georgia Institute of Technology researchers have developed a deep-learning method that uses a wearable smartphone camera to track a person’s activities during a day. It could lead to more powerful Siri-like personal assistant apps and tools for improving health.

In the research, the camera took more than 40,000 pictures (one every 30 to 60 seconds) over a six-month period. The researchers taught a computer to categorize these pictures across 19 activity classes, including cooking, eating, watching TV, working, spending time with family, and driving. The test subject wearing the camera could review and annotate the photos at the end of each day (deleting any as needed for privacy) to ensure that they were correctly categorized.

Wearable smartphone camera used in the research (credit: Daniel Castro et al.)

It knows what you are going to do next

The method was then able to determine with 83 percent accuracy what activity a person was doing at a given time, based only on the images.

The researchers believe they have gathered the largest annotated dataset of first-person images used to demonstrate that deep learning can “understand” human behavior and the habits of a specific person.

They also expect that within the next decade we will have ubiquitous devices that can improve our personal choices throughout the day.*

“Imagine if a device could learn what I would be doing next, ideally predict it, and recommend an alternative?” says Daniel Castro, a Ph.D. candidate in Computer Science and a lead researcher on the project, who helped present the method earlier this month at UBICOMP 2015 in Osaka, Japan. “Once it builds your own schedule by knowing what you are doing, it might tell you there is a traffic delay and you should leave sooner or take a different route.”

That could be based on a future version of a smartphone app like Waze, which allows drivers to share real-time traffic and road info. In possibly related news, Apple Inc. recently acquired Perceptio, a startup developing image-recognition systems for smartphones, using deep learning.

The open-access research, which was conducted in the School of Interactive Computing and the Institute for Robotics and Intelligent Machines at Georgia Tech, can be found here.

* Or not. “As more consumers purchase wearable tech, they unknowingly expose themselves to both potential security breaches and ways that their data may be legally used by companies without the consumer ever knowing,” TechRepublic notes.

Abstract of Predicting Daily Activities From Egocentric Images Using Deep Learning

We present a method to analyze images taken from a passive egocentric wearable camera along with the contextual information, such as time and day of week, to learn and predict everyday activities of an individual. We collected a dataset of 40,103 egocentric images over a 6 month period with 19 activity classes and demonstrate the benefit of state-of-the-art deep learning techniques for learning and predicting daily activities. Classification is conducted using a Convolutional Neural Network (CNN) with a classification method we introduce called a late fusion ensemble. This late fusion ensemble incorporates relevant contextual information and increases our classification accuracy. Our technique achieves an overall accuracy of 83.07% in predicting a person’s activity across the 19 activity classes. We also demonstrate some promising results from two additional users by fine-tuning the classifier with one day of training data.
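
The abstract does not spell out how the late fusion ensemble combines image and context information, so the following is only a minimal sketch of the general idea of late fusion, not the authors' method. It assumes a CNN has already produced per-class probabilities for an image, estimates a simple context prior P(activity | hour, weekday) from past labels, and multiplies the two distributions. The function names (`fit_context_prior`, `late_fusion`), the three-class label set, and all numbers are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def fit_context_prior(records, classes, smoothing=1.0):
    """Estimate P(class | hour, weekday) from (hour, weekday, label) records.

    Uses additive (Laplace) smoothing so unseen classes keep a small,
    non-zero probability. This is an assumed stand-in for the contextual
    part of the model, not the paper's ensemble.
    """
    counts = defaultdict(Counter)
    for hour, weekday, label in records:
        counts[(hour, weekday)][label] += 1

    def prior(hour, weekday):
        seen = counts.get((hour, weekday), Counter())
        total = sum(seen.values()) + smoothing * len(classes)
        return [(seen[name] + smoothing) / total for name in classes]

    return prior


def late_fusion(cnn_probs, context_probs):
    """Fuse two per-class distributions by multiplying and renormalizing."""
    fused = [p * q for p, q in zip(cnn_probs, context_probs)]
    total = sum(fused)
    return [value / total for value in fused]


# Hypothetical three-class example (the study used 19 classes).
classes = ["cooking", "eating", "driving"]

# Hypothetical label history: (hour of day, weekday index, activity).
history = [
    (8, 0, "driving"),
    (8, 0, "driving"),
    (8, 0, "eating"),
    (19, 0, "cooking"),
]
prior = fit_context_prior(history, classes)

# Hypothetical CNN softmax output for one image taken Monday at 8 a.m.
cnn_probs = [0.40, 0.25, 0.35]

fused = late_fusion(cnn_probs, prior(8, 0))
predicted = classes[max(range(len(classes)), key=fused.__getitem__)]
print(predicted)
```

In this toy case the image evidence alone slightly favors "cooking", but the context prior for Monday at 8 a.m. shifts the fused prediction to "driving", which illustrates how time and day of week could raise accuracy over an image-only classifier.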