We could get to the Singularity in ten years

December 26, 2014 by Ben Goertzel

It would require a different way of thinking about the timing of the Singularity, says AGI pioneer Ben Goertzel, PhD. Rather than a predictive exercise, it would require thinking about it the way an athlete thinks about a game when going into it, or the way the Manhattan Project scientists thought at the start of the project.

This article, written in 2010, is excerpted with permission from Goertzel’s new book, Ten Years To the Singularity If We Really, Really Try … and other Essays on AGI and its Implications.

We’ve discussed the Vingean, Kurzweilian argument that human-level AGI may be upon us shortly.

If we extrapolate various key technology trends into the near future, in the context of the dramatic overall technological growth the human race has seen over past centuries and millennia, it seems quite plausible that superintelligent artificial minds will be here much sooner than most people think.

This sort of objective, extrapolative view of the future has its strengths, and is well worth pursuing. But I think it’s also valuable to take a more subjective and psychological view, and think about AGI and the Singularity in terms of the power of the human spirit — what we really want for ourselves, and what we can achieve if we really put our minds to it.

I presented this sort of perspective on the timeline to Singularity and advanced AGI at the TransVision 2006 futurist conference, in a talk called “Ten Years to a Positive Singularity (If We Really, Really Try).” The conference was in Helsinki, Finland, and I wasn’t able to attend in person, so I delivered the talk by video.

The basic point of the talk was that if society put the kind of money and effort into creating a positive Singularity that we put into things like wars or television shows, then some pretty amazing things might happen. To quote a revised version of the talk, given to a different audience just after the financial crisis of Fall 2008:

Look at the US government’s response to the recent financial crisis – suddenly they’re able to materialize a trillion dollars here, a trillion dollars there. What if those trillions of dollars were being spent on AI, robotics, life extension, nanotechnology and quantum computing? It sounds outlandish in the context of how things are done now — but it’s totally plausible.

If we made a positive Singularity a real focus of our society, I think a ten year time-frame or less would be eminently possible.

Ten years from now would be 2020. Ten years from 2006, when that talk was originally given, would have been 2016, only six years from now. Either of these is a long time before Kurzweil’s putative 2045 prediction. Whence the gap?

When he cites 2045, Kurzweil is making a guess of the “most likely date” for the Singularity. The “ten more years” prediction is a guess of how fast things could happen with an amply funded, concerted effort toward a beneficial Singularity. So the two predictions have different intentions.

We consider Kurzweil’s 2045 a reasonable extrapolation of current trends, but we also think the Singularity could come a lot sooner, or a lot later, than that.

How could it come a lot later? Some extreme possibilities are easy to foresee. What if terrorists nuke the major cities of the world? What if anti-technology religious fanatics take over the world’s governments? But less extreme developments could have similar effects. Human history could simply take a direction other than massive technological advance, and be focused on warfare, or religion, or something else.

Or, though we reckon this less likely, it is also possible we could hit up against tough scientific obstacles that we can’t foresee right now. Intelligence could prove more difficult for the human brain to puzzle out, whether via analyzing neuroscience data, or engineering intelligent systems.

Moore’s Law and its cousins could slow down due to physical barriers; designing software for multicore architectures could prove problematically difficult; the pace of improvement in brain scanners could slow down.

How, on the other hand, could it take a lot less time? If the right people focus their attention on the right things.

Dantzig’s solution to ‘unsolvable’ statistics problems

The Ten Years to the Singularity talk began with a well-known motivational story, about a guy named George Dantzig (no relation to the heavy metal singer Glenn Danzig!). Back in 1939, Dantzig was studying for his PhD in statistics at the University of California, Berkeley. He arrived late for class one day and found two problems written on the board. He thought they were the homework assignment, so he wrote them down, then went home and solved them. He thought they were particularly hard, and it took him a while. But he solved them, and delivered the solutions to the teacher’s office the next day.

It turned out that the teacher had put those problems on the board as examples of “unsolvable” statistics problems: two of the greatest unsolved problems of mathematical statistics in the world, in fact. Six weeks later, Dantzig’s professor told him that he’d prepared one of his two “homework” proofs for publication. Eventually, Dantzig would use his solutions to those problems for his PhD thesis.

Here’s what Dantzig said about the situation: “If I had known that the problems were not homework, but were in fact two famous unsolved problems in statistics, I probably would not have thought positively, would have become discouraged, and would never have solved them.”

Dantzig solved these problems because he thought they were solvable. He thought that other people had already solved them. He was just doing them as “homework,” thinking everyone else in his class was going to solve them too.

There’s a lot of power in expecting to win. Athletic coaches know about the power of streaks. If a team is on a roll, they go into each game expecting to win, and their confidence helps them see more opportunities to win. Small mistakes are just shrugged away by the confident team, but if a team is on a losing streak, they go into each game expecting to screw up, somehow. A single mistake can put them in a bad mood for the whole game, and one mistake can pile on top of another more easily.

Oak Ridge K-25 plant, part of the Manhattan Project (credit: Wikimedia Commons)

To take another example, let’s look at the Manhattan Project. The Americans believed they needed to create nuclear weapons before the Germans did.

They assumed it was possible, and felt a huge burning pressure to get there first. Unfortunately, what they were working on so hard, with so much brilliance, was an ingenious method for killing a lot of people.

But, whatever you think of the outcome, there’s no doubt the pace of innovation in science and technology in that project was incredible.

And it all might never have happened if the scientists involved hadn’t already believed that Germany was ahead of them, and that somehow their inventing the ability to kill thousands, first, would save humanity.

How Might a Positive Singularity Get Launched In 10 Years From Now?

This line of thought suggests a somewhat different way of thinking about the timing of the Singularity. What if, rather than treating it as a predictive exercise (an exercise in objectively studying what’s going to happen in the world, as if we were outsiders to it), we thought about it the way an athlete thinks about a game when going into it, or the way the Manhattan Project scientists thought at the start of the project, or the way Dantzig thought about his difficult homework problems?

- What if we knew it was possible to create a positive Singularity in ten years? What if we assumed we were going to win, as a provisional but reasonable hypothesis?

- What if we thought everyone else in the class knew how to do it already?

- What if we were worried the bad guys were going to get there first?

Under this assumption, how then would we go about trying to create a positive Singularity?

Following this train of thought, even just a little way, will lead you along the chain of reasoning that led us to write this book.

One conclusion that seems fairly evident when taking this perspective is that AI is the natural area of focus.

Look at the futurist technologies at play these days — nanotechnology, biotechnology, robotics, AI — and ask, “which ones have the most likelihood of bringing us a positive Singularity within the next ten years?”

Nano and bio and robotics are all advancing fast, but they all require a lot of hard engineering work.

AI requires a lot of hard work too, but it’s a softer kind of hard work. Creating AI relies only on human intelligence, not on painstaking and time-consuming experimentation with physical substances and biological organisms.

And how can we get to AI? There are two big possibilities:

- Copy the human brain, or

- Come up with something cleverer

Copying the brain is the wrong approach

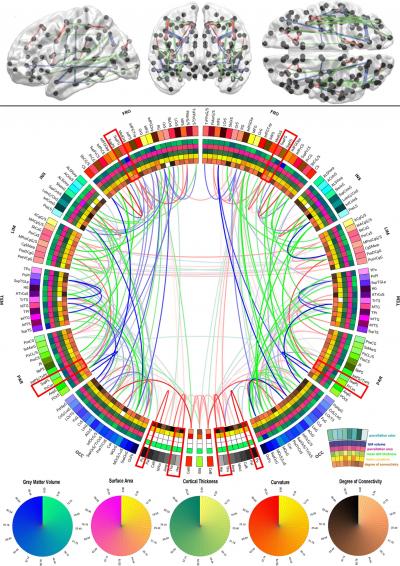

A graphical representation of human brain connectivity scaffold (credit: USC Institute for Neuroimaging and Informatics)

Both approaches seem viable, but the first approach has a problem. Copying the human brain requires far more understanding of the brain than we have now. Will biologists get there ten years from now? Probably not. Definitely not in five years.

So we’re left with the other choice: come up with something cleverer. Figure out how to make a thinking machine, using all the sources of knowledge at our disposal: computer science and cognitive science and philosophy of mind and mathematics and cognitive neuroscience and so forth.

But if this is feasible to do in the near term, which is what we’re suggesting, then why don’t we have AIs smarter than people right now? Of course, it’s a lot of work to make a thinking machine, but making cars and rockets and televisions is also a lot of work, and society has managed to deal with those problems.

The main reason we don’t have real AI right now is that almost no one has seriously worked on the problem. And (here is where things get even more controversial!) most of the people that have worked on the problem have thought about it in the wrong way.

Some people have thought about AI in terms of copying the brain, but, as I mentioned earlier, that means you have to wait until the neuroscientists have finished figuring out the brain. Trying to make AI based on our current, badly limited understanding of the brain is a clear recipe for failure.

We have no understanding yet of how the brain represents or manipulates abstraction. Neural network AI is fun to play with, but it’s hardly surprising it hasn’t led to human-level AI yet. Neural nets are based on extrapolating a very limited understanding of a few very narrow aspects of brain function.

The AI scientists who haven’t thought about copying the brain have mostly made another mistake. They’ve thought like computer scientists. Computer science is like mathematics — it’s all about elegance and simplicity. You want to find beautiful, formal solutions. You want to find a single, elegant principle. A single structure. A single mechanism that explains a whole lot of different things. A lot of modern theoretical physics is in this vein. The physicists are looking for a single, unifying equation underlying every force in the universe. Well, most computer scientists working on AI are looking for a single algorithm or data structure underlying every aspect of intelligence.

But that’s not the way minds work. The elegance of mathematics is misleading. The human mind is a mess, and not just because evolution creates messy stuff. The human mind is a mess because intelligence, when it has to cope with limited computing resources, is necessarily messy and heterogeneous.

Intelligence does include a powerful, elegant, general problem-solving component, and some people have more of it than others. Some people I meet seem to have almost none of it at all.

But intelligence also includes a whole bunch of specialized problem-solving components dealing with things like vision, socialization, learning physical actions, recognizing patterns in events over time, and so forth. This kind of specialization is necessary if you’re trying to achieve intelligence with limited computational resources.

Marvin Minsky introduced the metaphor of a “society of mind.” He says a mind needs to be a kind of society, with different agents carrying out different kinds of intelligent actions, all interacting with each other.

But a mind isn’t really like a society. It needs to be more tightly integrated than that. All the different parts of the mind, parts which are specialized for recognizing and creating different kinds of patterns, need to operate very tightly together, communicating in a common language, sharing information, and synchronizing their activities.

And then comes the most critical part: The whole thing needs to turn inwards on itself. Reflection. Introspection. These are two of the most critical kinds of specialized intelligence that we have in the human brain, and both rely critically on our general intelligence ability. A mind, if it wants to be really intelligent, has to be able to recognize patterns in itself, just like it recognizes patterns in the world, and it has to be able to modify and improve itself based on what it sees in itself. This is what “self” is all about.

This relates to what the philosopher Thomas Metzinger calls the “phenomenal self.” All of us carry around inside our minds a “phenomenal self.” An illusion of a holistic being. A whole person. An internal “self” that somehow emerges from the mess of information and dynamics inside our brains. This illusion is critical to what we are. The process of constructing this illusion is essential to the dynamics of intelligence.

Brain theorists haven’t understood the way the self emerges from the brain yet, because brain mapping isn’t advanced enough.

It’s about patterns

Computer scientists haven’t understood the self, because it isn’t about computer science. It’s about the emergent dynamics that happen when you put a whole bunch of general and specialized pattern recognition agents together: agents created in such a way that they can really cooperate, including agents oriented toward recognizing patterns in the society as a whole.

The specific algorithms and representations inside the pattern recognition agents – algorithms dealing with reasoning, or seeing, or learning actions, or whatever – these algorithms are what computer science focuses on. They’re important, but they’re not really the essence of intelligence. The essence of intelligence lies in getting the parts to all work together in a way that gives rise to the phenomenal self. That is, it’s about wiring together a collection of structures and processes into a holistic system in a way that lets the whole system recognize significant patterns in itself. With very rare exceptions, this has simply not been the focus of AI researchers.

When talking about AI in these pages, I’ll use the word “patterns” a lot. This is inspired in part by my book, The Hidden Pattern, which tries to get across the viewpoint that everything in the universe is made of patterns. This is not a terribly controversial perspective — Kurzweil has also described himself as a “patternist.” In the patternist perspective, everything you see around you, everything you think, everything you remember, that’s a pattern!

Following a long line of other thinkers in psychology and computer science, we conceive intelligence as the ability to achieve complex goals in complex environments. Even complexity itself has to do with patterns. Something is “complex” if it has a lot of patterns in it.

A “mind” is a collection of patterns for effectively recognizing patterns. Most importantly, a mind needs to recognize patterns about what actions are most likely to achieve its goals.

The phenomenal self is a big pattern, and what makes a mind really intelligent is its ability to continually recognize this pattern — the phenomenal self in itself.

Does it Take a Manhattan Project?

One of the more interesting findings from the “How Long Till Human-Level AI” survey we discussed above was about funding, and the likely best uses of hypothetical massive funding to promote AGI progress.

In the survey, we used the Manhattan Project as one of our analogies, just as I did in part of the discussion above — but in fact, it may be that we don’t need a Manhattan Project scale effort to get Singularity-enabling AI. The bulk of AGI researchers surveyed at AGI-09 felt that, rather than a single monolithic project, the best use of massive funding to promote AGI would be to fund a heterogeneous pool of different projects, working in different but overlapping directions.

In part, this reflects the reality that most of the respondents to the survey probably thought they had an inkling (or a detailed understanding) of a viable path to AGI, and feared that an AGI Manhattan Project would proceed down the wrong path instead of their “correct” path. But it also reflects the realities of software development. Most breakthrough software has come about through a small group of very brilliant people working together very tightly and informally. Large teams work better for hardware engineering than software engineering.

It seems most likely that the core breakthrough enabling AGI will come from a single, highly dedicated AGI software team. Once this breakthrough is made, a large group of software and hardware engineers will probably be useful for taking the next step, but that’s a different story.

What this suggests is that, quite possibly, all we need right now to get Singularity-enabling AGI is to get funding to a dozen or so of the right people. This would enable them to work on the right AGI project full time for a decade or so, or maybe even less.

It’s worth emphasizing that my general argument for the potential imminence of AGI does not depend on my perspective on any particular route to AGI being feasible. Unsurprisingly, I’m a big fan of the OpenCog project, of which I’m one of the founders and leaders. I’ll tell you more about this a little later on. But you don’t need to buy my argument for OpenCog as the most likely path to AGI, in order to agree with my argument for creating a positive Singularity by funding a constellation of dedicated AGI teams.

Even if OpenCog were the wrong path, there could still be a lot of sense in a broader bet that funding 100 dedicated AGI teams to work on their own independent ideas will result in one of them making the breakthrough. What’s shocking, given the amount of money and energy going into other sorts of technology development, is that this isn’t happening right now. (Or maybe it is, by the time you are reading this!!)
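The “broader bet” on many independent teams rests on a simple piece of probability. As a rough sketch, assume (hypothetically) that each funded team independently has the same small chance p of making the breakthrough; then the chance that at least one of n teams succeeds is 1 − (1 − p)^n. The function name and the per-team odds below are illustrative assumptions, not figures from the survey or from any real estimate.

```python
def chance_of_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds,
    when each attempt succeeds with the same probability p.

    Independence is an idealized assumption: real AGI teams that share
    the same flawed ideas would succeed or fail together.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Complement of "every single attempt fails".
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-team odds, chosen only for illustration.
for p in (0.01, 0.02, 0.05):
    one = chance_of_at_least_one(p, 1)
    hundred = chance_of_at_least_one(p, 100)
    print(f"p={p:.2f}: one team {one:.2f}, 100 teams {hundred:.2f}")
```

Under these assumptions, even a 1% chance per team becomes roughly a 63% chance that at least one of 100 teams succeeds. The independence assumption does most of the work here, which is one reason to fund a heterogeneous pool of teams rather than many variations on a single approach.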

Keeping it Positive

I’ve talked more about AI than about the Singularity or positiveness. Let me get back to those.

It should be obvious that if you can create an AI vastly smarter than humans, then pretty much anything is possible.

Or at least, once we reach that stage, there’s no way for us, with our puny human brains, to really predict what is or isn’t possible. Once the AI has its own self, and has superhuman level intelligence, it’s going to start learning and figuring things out on its own.

But what about the “positive” part? How do we know this AI won’t annihilate us all? Why won’t it just decide we’re a bad use of mass-energy, and re-purpose our component particles for something more important?

There’s no guarantee that this won’t happen, of course.

Just like there’s no guarantee that some terrorist won’t nuke your house tonight, or that you won’t wake up tomorrow morning to find the whole life you think you’ve experienced has been a long strange dream. Guarantees and real life don’t match up very well. (Sorry to break the news.)

However, there are ways to make bad outcomes unlikely, based on a rational analysis of AI technology and the human context in which it’s being developed.

The goal systems of humans are pretty unpredictable, but a software mind can potentially be different, because the goal system of an AI can be more clearly and consistently defined. Humans have all sorts of mixed-up goals, but there seems no clear reason why one can’t create an AI with a more crisply defined goal of helping humans and other sentient beings, as well as being good to itself. We will return to the problem of defining this sort of goal more precisely later, but to make a long story short, one approach (among many) is to set the AI the goal of figuring out what is most common among the various requests that various humans may make of it.

One risk of course is that, after it grows up a bit, the AGI changes its goals, even though you programmed it not to. Every programmer knows you can’t always predict the outcome of your own code. But there are plenty of preliminary experiments we can do to understand the likelihood of this happening. And there are specific AGI designs, such as the GOLEM design we’ll discuss below, that have been architected with a specific view toward avoiding this kind of pathology. This is a matter best addressed by a combination of experimental science and mathematical theory, rather than armchair philosophical speculation.

Ten Years to the Singularity?

How long till human-level or superhuman AGI? How long till the Singularity? None of us knows. Ray Kurzweil and others have made some valuable projections and predictions. But you and I are not standing outside of history analyzing its progress — we are co-creating it. Ultimately the answer to this question is highly uncertain, and, among many other factors, it depends on what we do.

To quote the closing words of the Ten Years to The Singularity TransVision talk:

A positive Singularity in 10 years?

Am I sure it’s possible? Of course not.

But I do think it’s plausible.

And I know this: If we assume it isn’t possible, it won’t be.

And if we assume it is possible – and act intelligently on this basis – it really might be. That’s the message I want to get across to you today.

There may be many ways to create a positive Singularity in ten years. The way I’ve described to you – the AI route – is the one that seems clearest to me. There are six billion people in the world so there’s certainly room to try out many paths in parallel.

But unfortunately the human race isn’t paying much attention to this sort of thing. Incredibly little effort and incredibly little funding goes into pushing toward a positive Singularity. I’m sure the total global budget for Singularity-focused research is less than the budget for chocolate candy – let alone beer … Or TV… Or weapons systems!

I find the prospect of a positive Singularity incredibly exciting – and I find it even more exciting that it really, possibly could come about in the next ten years. But it’s only going to happen quickly if enough of the right people take the right attitude – and assume it’s possible, and push for it as hard as they can.

Remember the story of Dantzig and the unsolved problems of statistics. Maybe the Singularity is like that. Maybe superhuman AI is like that. If we don’t think about these problems as impossibly hard – quite possibly they’ll turn out to be solvable, even by mere stupid humans like us.

This is the attitude I’ve taken with my work on OpenCog. It’s the attitude Aubrey de Grey has taken with his work on life extension. The more people adopt this sort of attitude, the faster the progress we’ll make.

We humans are funny creatures. We’ve developed all this science and technology – but basically we’re still funny little monkeylike creatures from the African savannah. We’re obsessed with fighting and reproduction and eating and various apelike things. But if we really try, we can create amazing things – new minds, new embodiments, new universes, and new things we can’t even imagine.