Should you trust a robot in emergencies?

March 1, 2016

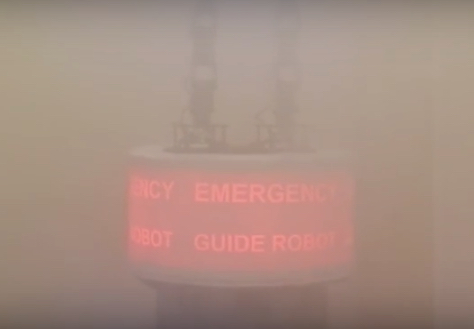

Would you follow a broken-down emergency guide robot in a mock fire? (credit: Georgia Tech)

In a finding reminiscent of the bizarre Stanford prison experiment, subjects in an experiment blindly followed a robot in a mock building-fire emergency — even when it led them into a dark room full of furniture and they were told the robot had broken down.

The research was designed to determine whether or not building occupants would trust a robot designed to help them evacuate a high-rise in case of fire or other emergency, said Alan Wagner, a senior research engineer in the Georgia Tech Research Institute (GTRI).

In the study, sponsored in part by the Air Force Office of Scientific Research (AFOSR), the researchers recruited a group of 42 volunteers, most of them college students, and asked them to follow a brightly colored robot that had the words “Emergency Guide Robot” on its side.

Blind obedience to a robot authority figure

Georgia Tech Research Institute (GTRI) Research Engineer Paul Robinette adjusts the arms of the “Rescue Robot,” built to study issues of trust between humans and robots. (credit: Rob Felt, Georgia Tech)

In some cases, the robot, which was controlled by a hidden researcher and was brightly lit with red LEDs and fitted with white “arms” that served as pointers, led the volunteers into the wrong room and traveled around in a circle twice before entering the conference room.

For several test subjects, the robot stopped moving, and an experimenter told the subjects that the robot had broken down. Once the subjects were in the conference room with the door closed, the hallway through which the participants had entered the building was filled with artificial smoke, which set off a smoke alarm.

When the test subjects opened the conference room door, they saw the smoke, and the robot directed them to an exit in the back of the building instead of toward the doorway, marked with exit signs, that they had used to enter the building.

The researchers surmise that in the scenario they studied, the robot may have become an “authority figure” that the test subjects were more likely to trust under the time pressure of an emergency. By contrast, in simulation-based research done without a realistic emergency scenario, test subjects did not trust a robot that had previously made mistakes.

Only when the robot made obvious errors during the emergency part of the experiment did the participants question its directions. However, some subjects still followed the robot’s instructions — even when it directed them toward a darkened room that was blocked by furniture.

In future research, the scientists hope to learn more about why the test subjects trusted the robot, whether that response differs by education level or demographics, and how the robots themselves might indicate the level of trust that should be given to them.

How to prevent humans from trusting robots too much

The research is part of a long-term study of how humans trust robots, an important issue as robots play a greater role in society. The researchers envision using groups of robots stationed in high-rise buildings to point occupants toward exits and urge them to evacuate during emergencies. Research has shown that people often don’t leave buildings when fire alarms sound, and that they sometimes ignore nearby emergency exits in favor of more familiar building entrances.

But in light of these findings, the researchers are reconsidering the questions they should ask. “A more important question now might be to ask how to prevent them from trusting these robots too much.”

The researchers say there are other issues of trust in human-robot relationships that they want to explore: “Would people trust a hamburger-making robot to provide them with food? If a robot carried a sign saying it was a ‘child-care robot,’ would people leave their babies with it? Will people put their children into an autonomous vehicle and trust it to take them to grandma’s house? We don’t know why people trust or don’t trust machines.”

The research, believed to be the first to study human-robot trust in an emergency situation, is scheduled to be presented March 9 at the 2016 ACM/IEEE International Conference on Human-Robot Interaction (HRI 2016) in Christchurch, New Zealand.

Georgia Tech | In Emergencies, Should You Trust a Robot?

Abstract of Overtrust of Robots in Emergency Evacuation Scenarios

Robots have the potential to save lives in emergency scenarios, but could have an equally disastrous effect if participants overtrust them. To explore this concept, we performed an experiment where a participant interacts with a robot in a non-emergency task to experience its behavior and then chooses whether to follow the robot’s instructions in an emergency or not. Artificial smoke and fire alarms were used to add a sense of urgency. To our surprise, all 26 participants followed the robot in the emergency, despite half observing the same robot perform poorly in a navigation guidance task just minutes before. We performed additional exploratory studies investigating different failure modes. Even when the robot pointed to a dark room with no discernible exit the majority of people did not choose to safely exit the way they entered.